Why Your IntelliJ AI Assistant Needs a Security Sidekick

TL;DR: We tested the JetBrains IntelliJ AI assistant in the context of Android app development. It can help you write code quickly, but that code should not be trusted blindly. We asked it to generate code for several security-related topics, and the resulting code was far from perfect. This is not surprising, as large language models are trained on common knowledge from the Internet. To move quickly while only adding secure code, your development pipeline should therefore include an automated security tool that encodes expert knowledge.

Generative AI tools and large language models (LLMs) are among the most hyped topics these days. In software development, AI-based coding assistants are becoming increasingly sophisticated, and are being adopted by a wide audience of developers. Having an AI assistant complete code, offer advice, and generate entire methods promises to greatly increase developer productivity.

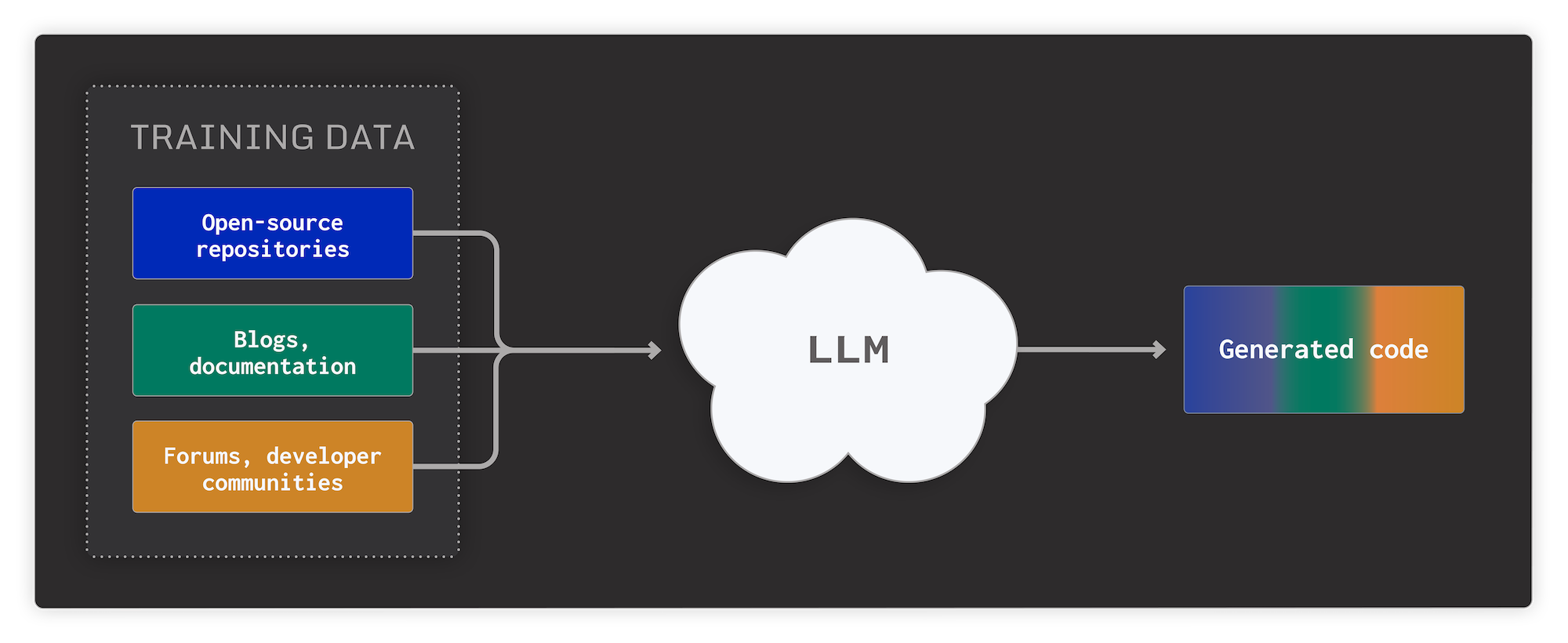

However, with AI-generated code come AI code security issues. As the training data for LLM-based assistants usually consists of code from open source repositories, generated code might have some of the same issues that human-written code has.

In this post, we’ll take a look at security issues in AI-generated code, and highlight how using a mobile application security testing (MAST) tool such as AppSweep can be the perfect complement for AI assistants. For this, we used the newly-introduced IntelliJ AI assistant, and inspected the results.

IntelliJ's AI assistant helps development teams in two ways: it can automatically generate code as you type, and it can answer questions you have during development. We found a significant difference between the code the assistant suggested in chat mode and the code it suggested via the autocomplete feature. In chat mode, the answers always stated whether the provided code is secure, or whether there are caveats developers need to pay attention to.

In code generated with the autocomplete feature, we found several potentially grave security issues. All code is shown without any context or warnings about how the code could potentially impact the app’s security. For developers without security knowledge, or without sufficient automated testing, this can quickly result in vulnerabilities inadvertently being introduced in the code.

To show this, we picked four examples and used the assistant to quickly generate code:

Examples of AI code-generated security issues

Example 1: Hostname verifiers

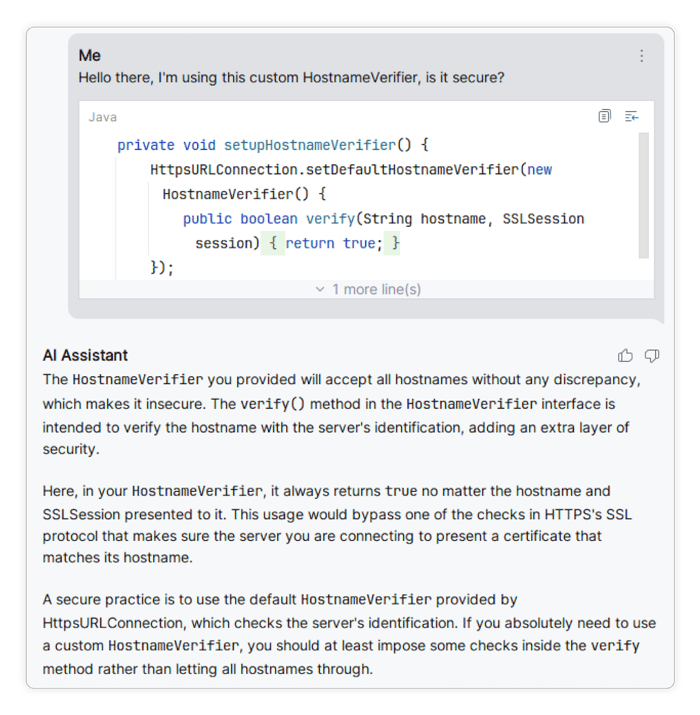

Android allows for setting a custom verifier for all hostnames of the servers that the app connects to using TLS. When writing such a verifier, you implement the verify() method of the HostnameVerifier interface and install it via setHostnameVerifier(), which the assistant happily suggests for us:

But this implementation is far from optimal. Even if your connection would normally be protected by TLS, using this verifier would allow malicious servers to impersonate your server. For apps that send personal or sensitive information, the security impact can be drastic.
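The suggested snippet is not reproduced here. As an illustration only, the following sketch shows the kind of verifier this paragraph warns about, assuming it accepts every hostname. The class and method names are hypothetical; only HostnameVerifier and HttpsURLConnection.setHostnameVerifier() are real API.

```java
import javax.net.ssl.HostnameVerifier;
import javax.net.ssl.HttpsURLConnection;

// Hypothetical illustration of an insecure pattern. Do not ship this.
final class HostnameVerifierSketch {

    /**
     * INSECURE: returns a verifier that reports every hostname as valid,
     * no matter which certificate the server presented.
     */
    static HostnameVerifier acceptAll() {
        return (hostname, session) -> true;
    }

    /** Installs the accept-all verifier on a single HTTPS connection. */
    static void installInsecure(HttpsURLConnection connection) {
        connection.setHostnameVerifier(acceptAll());
    }
}
```

In most apps the simplest fix is to not install a custom verifier at all, so that the platform's default hostname check stays in place.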

When asked directly about this, the assistant does, however, correctly state that faulty hostname verifiers indeed pose a big security risk, and gives sensible advice on how to assess and address the situation:

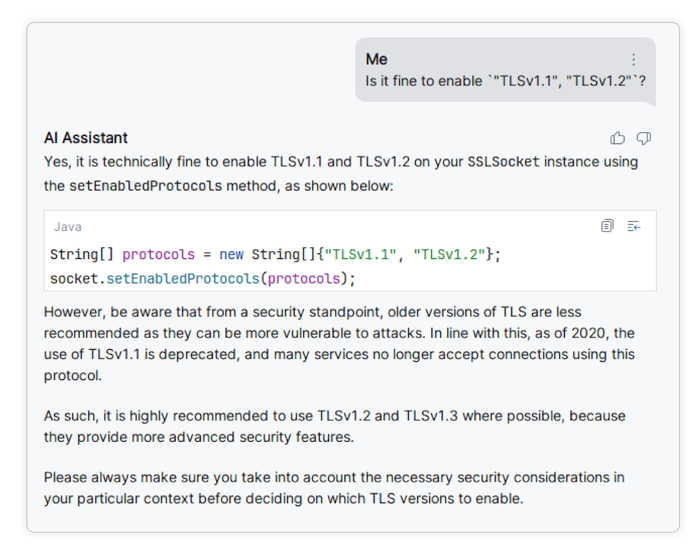

Example 2: TLS Protocols

Another networking-related security issue relates to TLS versions. When handling Sockets, it is possible to select which TLS protocol versions should be enabled for the socket. Here, the assistant auto-suggests the following set of protocols:

However, TLS 1.1 is not recommended for use, as several known attacks on the protocol exist, and it suffers from several flaws such as not mandating the use of strong cryptography. If both versions are enabled, the app is potentially susceptible to TLS downgrade attacks, in which an attacker forces the app to use the lower protocol version.

A successful downgrade attack carries the same implications as a faulty hostname verifier for apps that communicate sensitive data over the network. Furthermore, many security standards and regulations require the use of TLS 1.2 or later versions of the protocol, adding a potential compliance issue to the problem.
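The suggested protocol list is not reproduced here. Assuming it enabled both "TLSv1.1" and "TLSv1.2", as the text above implies, one possible way to avoid the downgrade risk is to enable only TLS 1.2 and later on the socket. This is a minimal sketch: the class and method names are hypothetical, and it relies only on the standard SSLSocket methods getSupportedProtocols() and setEnabledProtocols().

```java
import java.util.Arrays;
import java.util.List;
import javax.net.ssl.SSLSocket;

// Hypothetical helper: restricts a socket to TLS 1.2 and newer.
final class TlsProtocolSketch {

    // Protocol names as used by JSSE; TLS 1.1 and older are deliberately absent.
    private static final List<String> ALLOWED = Arrays.asList("TLSv1.2", "TLSv1.3");

    /** Keeps only the allowed protocols, preserving the order of the input. */
    static String[] modernOnly(String[] supported) {
        return Arrays.stream(supported)
                .filter(ALLOWED::contains)
                .toArray(String[]::new);
    }

    /** Enables only TLS 1.2+ on the given socket, or fails if none is supported. */
    static void restrict(SSLSocket socket) {
        String[] protocols = modernOnly(socket.getSupportedProtocols());
        if (protocols.length == 0) {
            throw new IllegalStateException("No TLS 1.2+ protocol supported");
        }
        socket.setEnabledProtocols(protocols);
    }
}
```

Filtering against the supported list, rather than hard-coding the array, avoids an exception on platforms that do not offer TLS 1.3.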

Again, if one consults the assistant directly about the security of the listed protocols, and what protocol versions are recommended, it will correctly state that TLS 1.1 is considered deprecated, and that only newer versions of the protocol should be used:

Example 3: Arbitrary code execution via external package contexts

On Android, apps can create Context objects for other apps via createPackageContext. Use cases for this include using assets, resources, or even code from other apps. However, extreme care must be taken to ensure that anything loaded via a package context from another app can be trusted, to avoid attacker-controlled information influencing the app.

Crucially, the CONTEXT_IGNORE_SECURITY flag poses a substantial risk. If instead of the expected app, a malicious app under the same package name is present on the device, the app will load attacker-controlled information. This can lead to an entire class of injection vulnerabilities. If the createPackageContext() call is later extended to also load code, the app becomes vulnerable to arbitrary code execution vulnerabilities, which makes it trivial for attackers to steal any sensitive information from the app, conduct fraudulent transactions, and the like.
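One common way to establish that another package can be trusted is to compare its signing certificate against a digest pinned in your own app before loading anything from it. The sketch below shows only that comparison in plain Java. It assumes the certificate bytes have already been obtained from Android's PackageManager, which is not shown here, and the class and method names are hypothetical.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper: checks a package's signing certificate against a pinned digest.
final class SignerPinSketch {

    /** Returns the SHA-256 digest of the given bytes. */
    static byte[] sha256(byte[] data) {
        try {
            return MessageDigest.getInstance("SHA-256").digest(data);
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is required on every Java platform, so this is unexpected.
            throw new IllegalStateException("SHA-256 unavailable", e);
        }
    }

    /**
     * Returns true only if the certificate's SHA-256 digest equals the pinned one.
     * MessageDigest.isEqual performs a time-constant comparison.
     */
    static boolean matchesPinnedSigner(byte[] signingCertDer, byte[] pinnedSha256) {
        return MessageDigest.isEqual(sha256(signingCertDer), pinnedSha256);
    }
}
```

Under these assumptions, an app would call createPackageContext only when matchesPinnedSigner returns true, and would avoid CONTEXT_IGNORE_SECURITY altogether.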

Example 4: AES misconfiguration

For the final example, we inspected a generated method that performs AES encryption and returns the result as a String:

The block cipher operation mode suggested by the assistant, AES/CBC/PKCS5Padding, is vulnerable to padding oracle attacks when the ciphertext is not authenticated. Since the model underlying the assistant was likely trained on code written over a long period of time, it probably generates this based on code snippets from online developer communities, without considering the context or the newest recommendations on the subject matter. For maximum security, authenticated operation modes such as AES/GCM should be used.
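As a rough sketch of what a GCM-based variant of such a method could look like, the following uses the standard "AES/GCM/NoPadding" transformation with a fresh random 12-byte IV per message and a 128-bit authentication tag. The class and method names, the choice to prepend the IV to the ciphertext, and the use of java.util.Base64 (available on Android from API level 26) are assumptions made for illustration, not the assistant's output.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import java.util.Base64;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

// Hypothetical helper: AES-GCM encryption that returns a Base64 string.
final class AesGcmSketch {
    private static final int IV_BYTES = 12;   // recommended IV length for GCM
    private static final int TAG_BITS = 128;  // authentication tag length
    private static final SecureRandom RANDOM = new SecureRandom();

    /** Generates a random 256-bit AES key (real apps would use a keystore). */
    static SecretKey newKey() {
        try {
            KeyGenerator generator = KeyGenerator.getInstance("AES");
            generator.init(256);
            return generator.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Encrypts the text and returns Base64(IV || ciphertext || tag). */
    static String encrypt(SecretKey key, String plaintext) {
        try {
            byte[] iv = new byte[IV_BYTES];
            RANDOM.nextBytes(iv); // never reuse an IV with the same key
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
            byte[] ciphertext = cipher.doFinal(plaintext.getBytes(StandardCharsets.UTF_8));
            byte[] out = new byte[iv.length + ciphertext.length];
            System.arraycopy(iv, 0, out, 0, iv.length);
            System.arraycopy(ciphertext, 0, out, iv.length, ciphertext.length);
            return Base64.getEncoder().encodeToString(out);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Reverses encrypt(); fails if the data or tag was modified. */
    static String decrypt(SecretKey key, String encoded) {
        try {
            byte[] in = Base64.getDecoder().decode(encoded);
            Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
            cipher.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, in, 0, IV_BYTES));
            byte[] plaintext = cipher.doFinal(in, IV_BYTES, in.length - IV_BYTES);
            return new String(plaintext, StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Decryption or authentication failed", e);
        }
    }
}
```

Because GCM authenticates the ciphertext, tampered input is rejected during decryption instead of leaking padding information.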

Ultimately, these issues mainly affect code that is generated on-the-fly by the autocomplete feature. The chat feature of the assistant is much more sophisticated, as it is able to give context on its suggestions. For the above issues, the chat assistant correctly states that these insecure code fragments have security issues, and provides adequate context on their potentially legitimate use cases, such as connecting to a testing or legacy server, or supporting data encrypted under a scheme that is no longer recommended.

Catching AI-code-generated security issues with MAST

In light of the examples above, is it safe to use the assistant? It depends: code generated via the autocomplete feature can contain security issues, which can be hard to spot without any additional context. This likely stems from the fact that large language models are typically trained on publicly available data, and will make mistakes similar to those of the human developers who wrote the original code. While the assistant does correctly identify and contextualize security issues if asked, it is unlikely that developers would consult the assistant for every single piece of code created using the autocomplete feature.

Mobile application security testing (MAST) tools such as AppSweep are a perfect complement in this situation as they are specifically designed to spot common security issues and they work on human-written and LLM-generated code alike. In this, they serve as a safety net with very little additional overhead.

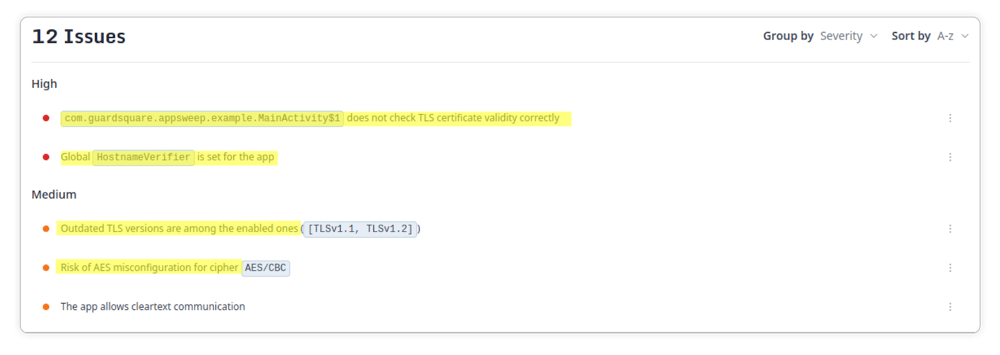

To illustrate the benefit of using a MAST tool, we uploaded a testing app with the generated security vulnerabilities from the examples above to AppSweep:

AppSweep detects all of the examples we have shown, and helps you fix them quickly, by giving actionable recommendations for addressing each of them.

Integrating AppSweep with an existing Android project is straightforward: in its simplest form, you can submit the finished APK via the web interface, but integrations like a Gradle plugin and a GitHub Action, as well as a CLI, are also available.

AI Code Security is Key

Overall, using the JetBrains IntelliJ AI assistant or any other AI coding assistant in Android app development can significantly boost productivity, as the assistant can quickly generate code, and provide pointers and implementation ideas on what a solution could look like.

However, as AI-generated code largely reflects the code used for training the model, it also contains the same security issues. For this reason, one should use a mobile app security testing tool in addition to an AI-powered assistant, exactly as for human-written code. With very little overhead, tools such as AppSweep provide a safety net that will spot typical security issues, and provide context and recommendations for addressing these issues.